DSM-G600, DNS-3xx and NSA-220 Hack Forum

Unfortunately no one can be told what fun_plug is - you have to see it for yourself.

Announcement

IRC Channel #funplug on irc.freenode.org

#1 2007-03-10 00:47:48

- woody

- Member

- Registered: 2007-03-05

- Posts: 31

DNS-323 performance

What kind of file transfer performance should I expect? I've run a few tests copying from the DNS-323 to my PC and I'm getting ~9 MB/s:

| size (bytes) | time (s) | MB/s |
|---|---|---|
| 28082608 | 3.14 | 8.53 |
| 28082608 | 2.83 | 9.46 |
| 28082608 | 2.8 | 9.56 |
| 28082608 | 2.78 | 9.63 |
| Avg | | 9.30 |
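The throughput column can be rechecked from the size and time columns. The sketch below assumes the sizes are in bytes and the times in seconds (the post does not label them). Under that assumption the posted figures match binary megabytes (MiB/s, 1,048,576 bytes) rather than decimal megabytes; the function and variable names are illustrative only.

```python
# Recompute the throughput column from the size/time pairs in the post.
# Assumption: size is in bytes and time is in seconds.
SIZE_BYTES = 28082608
TIMES_S = [3.14, 2.83, 2.8, 2.78]

MIB = 1024 * 1024      # binary megabyte
MB = 1000 * 1000       # decimal megabyte


def rate(size_bytes, seconds, unit):
    """Return throughput in the given unit per second."""
    return size_bytes / seconds / unit


raw_mib = [rate(SIZE_BYTES, t, MIB) for t in TIMES_S]
rates_mib = [round(r, 2) for r in raw_mib]
rates_mb = [round(rate(SIZE_BYTES, t, MB), 2) for t in TIMES_S]
avg_mib = round(sum(raw_mib) / len(raw_mib), 2)

print("MiB/s:", rates_mib, "average:", avg_mib)
print("MB/s :", rates_mb)
```

The same copies expressed in decimal MB/s come out a few percent higher, which is worth keeping in mind when comparing against figures from other posters.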

My network is all switched and wired (10/100). Does that seem about right? It seems like it 'felt' a little snappier with my old fileservers.

Woody

#2 2007-03-10 12:04:08

Re: DNS-323 performance

Here you can find DSM-G600 performance.

http://dns323.kood.org/forum/p1539-2007 … html#p1539

Could someone run nbench on the DNS-323 so I can add the results to both wikis?

http://dns323.kood.org/dsmg600/information:benchmarks

• DSM-G600 - NetBSD hdd-boot - 80GB Samsung SP0802N

• NSA-220 - Gentoo armv5tel 20110121 hdd-boot - 2x 2TB WD WD20EADS

#3 2007-03-10 13:39:15

Re: DNS-323 performance

my results:

BYTEmark* Native Mode Benchmark ver. 2 (10/95)

Index-split by Andrew D. Balsa (11/97)

Linux/Unix* port by Uwe F. Mayer (12/96,11/97)

TEST : Iterations/sec. : Old Index : New Index

: : Pentium 90* : AMD K6/233*

--------------------:------------------:-------------:------------

NUMERIC SORT : 127.36 : 3.27 : 1.07

STRING SORT : 9.3477 : 4.18 : 0.65

BITFIELD : 2.9155e+07 : 5.00 : 1.04

FP EMULATION : 8.9321 : 4.29 : 0.99

FOURIER : 27.395 : 0.03 : 0.02

ASSIGNMENT : 1.3099 : 4.98 : 1.29

IDEA : 365.53 : 5.59 : 1.66

HUFFMAN : 77.131 : 2.14 : 0.68

NEURAL NET : 0.041329 : 0.07 : 0.03

LU DECOMPOSITION : 1.2549 : 0.07 : 0.05

==========================ORIGINAL BYTEMARK RESULTS==========================

INTEGER INDEX : 4.001

FLOATING-POINT INDEX: 0.062

Baseline (MSDOS*) : Pentium* 90, 256 KB L2-cache, Watcom* compiler 10.0

==============================LINUX DATA BELOW===============================

CPU :

L2 Cache :

OS : Linux 2.6.6-arm2

C compiler : gcc version 3.3.5 (Debian 1:3.3.5-13)

libc : ld-2.3.2.so

MEMORY INDEX : 1.000

INTEGER INDEX : 1.000

FLOATING-POINT INDEX: 0.031

Baseline (LINUX) : AMD K6/233*, 512 KB L2-cache, gcc 2.7.2.3, libc-5.4.38

* Trademarks are property of their respective holder.

#4 2007-03-11 01:22:30

- fordem

- Member

- Registered: 2007-01-26

- Posts: 1938

Re: DNS-323 performance

It's going to get really confusing if we don't use the same units. Can we agree on 9 MB/s as 9 MegaBytes/sec and not 9 megabits/sec?

On a 100 Mbit/s LAN I get around 69 Mbit/s (that's 69 megabits/sec or about 8.5 MegaBytes/sec) and roughly double that on a gigabit LAN. Read speeds are perhaps 10-15% higher.

These figures come from a 2 GByte test file transferred with a Windows Explorer copy & paste, with the numbers read from my network switch using SNMP. For reference, the maximum throughput I have observed is 98 Mbit/s on a 100 Mbit/s link and 239 Mbit/s on gigabit, so I believe the figures above are the limits of the DNS-323 itself.

Last edited by fordem (2007-03-11 02:04:36)

#5 2007-03-11 01:58:57

Re: DNS-323 performance

Here's an overview of the standardized units: http://physics.nist.gov/cuu/Units/binary.html

MB = 1,000,000 bytes

MiB = 1,024 × 1,024 bytes

Mbit = 1,000,000 bits

...
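To make the unit definitions concrete, here is a small conversion sketch. It uses only the definitions listed above plus 8 bits per byte; the helper names are made up for illustration. It is applied to the 69 Mbit/s figure quoted earlier in the thread.

```python
# Conversions between the units defined above (8 bits per byte).
BITS_PER_BYTE = 8
MB = 1_000_000        # decimal megabyte, in bytes
MIB = 1024 * 1024     # binary megabyte, in bytes


def mbit_to_mb(mbit_per_s):
    """Megabits per second -> decimal megabytes per second."""
    return mbit_per_s / BITS_PER_BYTE


def mb_to_mib(mb_per_s):
    """Decimal megabytes per second -> binary megabytes per second."""
    return mb_per_s * MB / MIB


link_rate = 69  # Mbit/s, the 100 Mbit LAN figure quoted in this thread
print(f"{link_rate} Mbit/s = {mbit_to_mb(link_rate):.3f} MB/s "
      f"= {mb_to_mib(mbit_to_mb(link_rate)):.3f} MiB/s")
```

The gap between MB and MiB is just under 5%, small next to the factor of 8 between bits and bytes, which is the mix-up the thread is mostly worried about.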

#6 2007-03-11 04:56:51

- phoenix

- Member

- From: Germany

- Registered: 2007-03-11

- Posts: 7

Re: DNS-323 performance

Network: 323 and host computer plugged into the same gigabit ethernet switch (dlink DGS-1008D)

Drives: two Samsung 7200rpm/16MB/500GB in RAID1 (mirror) mode, ext2 file system

Read speed while checksumming large files on the host computer (i.e. md5sum Z:\my_archive.zip): 15-20MB/sec

(Yes, that is megabytes per second and showing between 15 and 17% network usage in taskmanager)

(Host computer CPU is at 70-80% load, so the limit is either the PCI-bus of the host OR in fact the dlink dns-323)

Write speed copying a large file to the 323 is 5~11MB/sec (megabytes per second again).

But the host PC's drive is pretty slow (3yo 5400rpm/120GB Samsung), so I don't think I've yet reached the limits of the DNS-323.

Not bad for a networked drive, I'd say. A USB-attached drive shouldn't be much faster, as we're at the mechanical limits of the disks, I think.

I'm still figuring out the large file bug, so I'll probably repost some benchmarks in the next few days while copying and md5summing.

Last edited by phoenix (2007-03-11 04:58:35)

#7 2007-03-12 07:43:30

- mig

- Member

- From: Seattle, WA

- Registered: 2006-12-21

- Posts: 532

Re: DNS-323 performance

phoenix wrote:

Not bad for a networked drive, I'd say. A USB-attached drive shouldn't be much faster, as we're at the mechanical limits of the disks, I think.

Wikipedia has a chart http://en.wikipedia.org/wiki/Serial_ATA … ther_buses which lists various hard drive bus technologies as well as the SATA II specification. 15-20MB/sec is well below "the mechanical limits of the disks".

There is a web site, smallNetBuilder http://www.smallnetbuilder.com/content/ … 71/75/1/7/, that has a review of the DNS-323. Page 8 of the review lists performance specifications and describes how smallNetBuilder tested the DNS-323 system.

Using the smallNetBuilder test format, I get ~15MegaBytes/sec and ~20MegaBytes/sec read on a gigabit network from a winXP machine using the default samba (DNS specs in my signature).

Last edited by mig (2007-05-12 02:29:48)

DNS-323 • 2x Seagate Barracuda ES 7200.10 ST3250620NS 250GB SATAII (3.0Gb/s) 7200RPM 16MB • RAID1 • FW1.03 • ext2

Fonz's v0.3 fun_plug http://www.inreto.de/dns323/fun-plug

#8 2007-03-14 09:40:03

Re: DNS-323 performance

levring wrote:

my results:

#9 2007-05-11 11:51:32

- raoul.grasselli

- Member

- Registered: 2007-04-14

- Posts: 18

Re: DNS-323 performance

configuration:

DNS-323 with Hitachi Deskstar T7K250 250GB (formatted as ext2), firmware 1.01 (factory)

D-Link DGS-1005D - Gbit switch

External HDD on USB 2.0

Transferring over 100GB of multimedia files, about 700MB each, from the USB 2.0 drive to the DNS-323,

I recorded a transfer rate (writing to the DNS-323) of 140Mb/s (read from i-band usr).

The rate ranged from a minimum of 135 to a maximum of 143 Mb/s.

Last edited by raoul.grasselli (2007-05-11 11:52:10)

#10 2007-05-12 00:12:15

- fordem

- Member

- Registered: 2007-01-26

- Posts: 1938

Re: DNS-323 performance

mig wrote:

phoenix wrote:

Not bad for a networked drive, I'd say. A USB-attached drive shouldn't be much faster, as we're at the mechanical limits of the disks, I think.

Wikipedia has a chart http://en.wikipedia.org/wiki/Serial_ATA … ther_buses which lists various hard drive bus technologies as well as the SATA II specification. 15-20MB/sec is well below "the mechanical limits of the disks".

That chart lists the bus transfer speeds, which are in no way related to the mechanical limits of the disks.

The mechanical limits of the disks are primarily determined by the rotational speed and the number of sectors per track. Old-style disks with 17 x 512-byte sectors per track spinning at 7,200 rpm would have had a transfer speed of around 1 MB/sec. Newer disks do not have the same fixed CHS (cylinder/head/sector) structure; instead, the number of sectors per track varies from the inner cylinders to the outer cylinders, causing the transfer speed to vary with the head position.
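The "around 1 MB/sec" figure can be reproduced with a quick calculation. This is a simplified sketch that assumes one full track is read per revolution and ignores seek time, head switches and interleave; the function name is illustrative.

```python
def track_rate_bytes_per_s(sectors_per_track, rpm, bytes_per_sector=512):
    """Sustained media rate if one full track passes under the head per revolution."""
    revolutions_per_s = rpm / 60
    return sectors_per_track * bytes_per_sector * revolutions_per_s


# The old-style geometry from the post: 17 sectors of 512 bytes at 7,200 rpm.
old_style = track_rate_bytes_per_s(17, 7200)
print(f"{old_style:,.0f} bytes/s = {old_style / 1_000_000:.2f} MB/s")

# The rate scales linearly with sectors per track, which is why zoned disks
# are faster on the outer cylinders (more sectors per track) than the inner ones.
assert track_rate_bytes_per_s(34, 7200) == 2 * old_style
```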

#11 2008-09-17 00:19:41

- Spud387

- New member

- Registered: 2008-09-17

- Posts: 1

Re: DNS-323 performance

What is everyone using to test their network transfer speeds? I kind of want something that will be comparable across the 3 different types of machines.

I have 2 vista PCs, 3 XP machines & 1 OSX machine in my house and I am trying to determine their transfer speeds based on where they are located in the house.

I am trying to do this to determine if the current transfer slowdowns are caused by crosstalk in the cabling based on its location.

thx

#12 2008-09-18 16:45:17

Re: DNS-323 performance

#13 2009-12-20 20:11:19

- rh

- New member

- Registered: 2009-12-20

- Posts: 2

Re: DNS-323 performance

Let's raise an old topic.

While upgrading my disks, I did a little throughput test to determine optimum setting for my use. I hope others find my results interesting.

I run an all-Linux network, and sometimes boot to XP for gaming. The only performance issues so far have been when watching Blu-ray images over the network, so I tested the throughput of a single 1 GB file. I also updated the firmware to 1.08beta8. The first two questions were whether enabling jumbo frames would give a performance boost, and which network filesystem, NFS or Samba, is faster. All tests were run on an ext3 filesystem with the disks as two separate volumes.

| Protocol | Jumbo frames | Read | Write |
|---|---|---|---|
| NFS | with | 18.4 MB/s | 6.9 MB/s |
| NFS | without | 19.7 MB/s | 12.1 MB/s |
| Samba | with | 12.4 MB/s | 837 kB/s |
| Samba | without | 12.9 MB/s | 834 kB/s |

The poor Samba write performance was likely caused by a bug in the Linux CIFS driver. On XP I got about 16 MB/s read and 13 MB/s write with Samba. The negative impact of jumbo frames was probably due to buggy desktop hardware or the router. Enabling jumbo frames on the desktop PC caused file transfers to hang, so jumbo frames were enabled only on the DNS-323. On a network where all hardware supports jumbo frames, the results might have been different. I decided to go with NFS and without jumbo frames enabled.

The next question was what kind of performance impact the ext3 filesystem has on the DNS-323 in comparison to ext2.

| Filesystem | Read | Write |
|---|---|---|
| Ext2 | 20.0 MB/s | 15.4 MB/s |
| Ext3 | 19.7 MB/s | 12.1 MB/s |

The last question was how much performance changes when the disks are configured as two separate volumes, JBOD, RAID0 or RAID1. All tests were run on an ext2 filesystem.

| Configuration | Read | Write |
|---|---|---|
| 2 vols | 20.0 MB/s | 15.4 MB/s |
| JBOD | 19.0 MB/s | 15.5 MB/s |
| Raid0 | 20.9 MB/s | 15.3 MB/s |
| Raid1 | 19.4 MB/s | 13.5 MB/s |

After these results I decided to go with NFS on ext3-formatted drives configured as two separate volumes.

#14 2009-12-22 15:07:31

- idallen

- New member

- Registered: 2009-12-22

- Posts: 2

Re: DNS-323 performance

The DNS-323 only pulls a maximum of 32MB/sec off the disk internally (via hdparm -t /dev/sda).

That will set the upper bound on any networked performance. Is that really the best the hardware can do?

#16 2010-07-13 05:04:18

- anodyne

- New member

- Registered: 2009-04-26

- Posts: 3

Re: DNS-323 performance

Did some testing for my own interest to find the performance hit of EXT3 vs EXT2. I also decided to compare individual disk vs RAID0 & RAID1. And I did it with two types of workload: one large file (~400MB) and multiple (10000+) small files (~900MB total). This is with a 2.66GHz P4 system (PATA disks) running XP on a gigabit network, standard frames. I'm connecting to the DNS via Samba. Here are the summarised results (values are transfer rates in MB/sec):

1) Single large file

| Filesystem | Individual | RAID0 | RAID1 |
|---|---|---|---|
| Ext2 | 12.4 | 12.7 | 12.1 |
| Ext3 | 10.4 | 10.2 | 9.5 |

2) Multiple small files

| Filesystem | Individual | RAID0 | RAID1 |
|---|---|---|---|
| Ext2 | 2.5 | 2.5 | 2.4 |
| Ext3 | 2.3 | 2.3 | 2.2 |

Conclusion: in this environment, EXT3 is 10-20% slower. RAID1 has a slight performance penalty. And there's no benefit to RAID0.
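The relative ext3 penalty can be worked out from the figures in the post. The sketch below only recomputes percentages from the posted MB/sec values; the dictionary layout and names are illustrative. With these numbers the penalty comes out at roughly 16-21% for the single large file and about 8% for the many small files, so the "10-20%" in the conclusion reads as a rounded summary.

```python
# Transfer rates in MB/sec, copied from the post: (Individual, RAID0, RAID1).
results = {
    "large file": {"ext2": (12.4, 12.7, 12.1), "ext3": (10.4, 10.2, 9.5)},
    "small files": {"ext2": (2.5, 2.5, 2.4), "ext3": (2.3, 2.3, 2.2)},
}
layouts = ("Individual", "RAID0", "RAID1")


def slowdown_percent(ext2_rate, ext3_rate):
    """How much slower ext3 is than ext2, as a percentage of the ext2 rate."""
    return (ext2_rate - ext3_rate) / ext2_rate * 100


penalty = {
    workload: [round(slowdown_percent(e2, e3), 1)
               for e2, e3 in zip(rates["ext2"], rates["ext3"])]
    for workload, rates in results.items()
}

for workload, values in penalty.items():
    for layout, value in zip(layouts, values):
        print(f"{workload:11s} {layout:10s} ext3 slower by {value}%")
```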

#17 2010-07-13 14:19:49

- fordem

- Member

- Registered: 2007-01-26

- Posts: 1938

Re: DNS-323 performance

anodyne wrote:

Did some testing for my own interest to find the performance hit of EXT3 vs EXT2. I also decided to compare individual disk vs RAID0 & RAID1. And I did it with two types of workload: one large file (~400MB) and multiple (10000+) small files (~900MB total). This is with a 2.66GHz P4 system (PATA disks) running XP on a gigabit network, standard frames. I'm connecting to the DNS via Samba. Here are the summarised results (values are transfer rates in MB/sec):

1) Single large file

| Filesystem | Individual | RAID0 | RAID1 |
|---|---|---|---|
| Ext2 | 12.4 | 12.7 | 12.1 |
| Ext3 | 10.4 | 10.2 | 9.5 |

2) Multiple small files

| Filesystem | Individual | RAID0 | RAID1 |
|---|---|---|---|
| Ext2 | 2.5 | 2.5 | 2.4 |
| Ext3 | 2.3 | 2.3 | 2.2 |

Conclusion: in this environment, EXT3 is 10-20% slower. RAID1 has a slight performance penalty. And there's no benefit to RAID0.

Well done!!!

Well thought through and well written. Many people have done their own tests, but the test methodology and the write-up were so poor that the numbers were not really suited to anything other than a "mine is faster than yours" comparison.

I note you say you were connecting to the DNS via Samba and interpret this to mean that you were running Linux on the P4 system. I vaguely recall a couple of threads that suggest the DNS-323 does not perform as well in this environment as it does in a Windows environment.

#18 2010-07-14 01:15:40

- skydreamer

- Member

- From: At the Atlantic Coast

- Registered: 2007-01-06

- Posts: 232

Re: DNS-323 performance

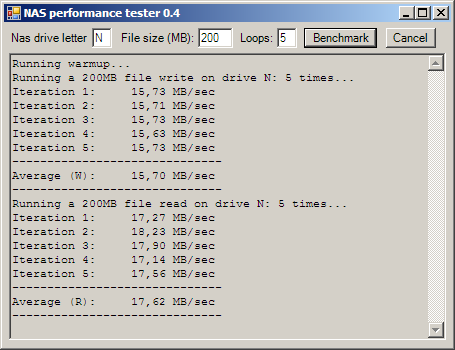

Another test: RAID1 on a standard CentOS 5.5 machine compared with a DNS-323 in RAID1 on the same LAN with 9K jumbo frames. The test was launched from a PC running W7/64bit:

Running warmup...

Running a 200MB file write on drive z: 5 times...

Iteration 1: 83.85 MB/sec

Iteration 2: 74.87 MB/sec

Iteration 3: 68.23 MB/sec

Iteration 4: 84.21 MB/sec

Iteration 5: 85.61 MB/sec

------------------------------

Average (W): 79.36 MB/sec

------------------------------

Running a 200MB file read on drive z: 5 times...

Iteration 1: 72.17 MB/sec

Iteration 2: 72.91 MB/sec

Iteration 3: 77.45 MB/sec

Iteration 4: 77.58 MB/sec

Iteration 5: 77.28 MB/sec

------------------------------

Average (R): 75.48 MB/sec

------------------------------

Running warmup...

Running a 200MB file write on drive z: 5 times...

Iteration 1: 18.33 MB/sec

Iteration 2: 18.7 MB/sec

Iteration 3: 18.75 MB/sec

Iteration 4: 18.52 MB/sec

Iteration 5: 18.06 MB/sec

------------------------------

Average (W): 18.47 MB/sec

------------------------------

Running a 200MB file read on drive z: 5 times...

Iteration 1: 28.2 MB/sec

Iteration 2: 28.87 MB/sec

Iteration 3: 28.49 MB/sec

Iteration 4: 30.19 MB/sec

Iteration 5: 30.38 MB/sec

------------------------------

Average (R): 29.23 MB/sec

------------------------------
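The two runs can be compared directly by recomputing the averages from the iteration lines. In this sketch the labels "centos" and "dns323" are an assumption based on the order in which the post names the two machines (the log itself does not say which run is which). Averages recomputed from the rounded iteration values can differ from the tool's printed average in the last digit.

```python
from statistics import mean

# (iterations in MB/sec, average reported by the tool), copied from the log.
# Assumption: the first pair of runs is the CentOS box, the second the DNS-323.
runs = {
    ("centos", "write"): ([83.85, 74.87, 68.23, 84.21, 85.61], 79.36),
    ("centos", "read"): ([72.17, 72.91, 77.45, 77.58, 77.28], 75.48),
    ("dns323", "write"): ([18.33, 18.7, 18.75, 18.52, 18.06], 18.47),
    ("dns323", "read"): ([28.2, 28.87, 28.49, 30.19, 30.38], 29.23),
}

recomputed = {key: mean(iters) for key, (iters, _) in runs.items()}

for (machine, op), value in recomputed.items():
    reported = runs[(machine, op)][1]
    print(f"{machine:6s} {op:5s} recomputed {value:.2f} MB/sec, reported {reported}")

# How many times faster the first machine is than the second, per operation.
ratio = {
    op: recomputed[("centos", op)] / recomputed[("dns323", op)]
    for op in ("write", "read")
}
print({op: round(r, 1) for op, r in ratio.items()})
```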
